Cloudant PouchDB sync (not) CORS error

The other day, I spent more time than I should have looking into an issue syncing between a local PouchDB instance in Firefox and a remote CouchDB hosted by IBM Cloudant. The error messages were less than helpful, even outright misleading, and Google was of little help. I have the vague feeling I’ve run into this before, so this time I’m writing it down.

Cloudant provides CouchDB hosting. This is very convenient if, like me, you loathe dealing with hosting infrastructure. They even have a free tier, which gets you a small CouchDB server at no cost, limited to 1 GB of storage, 20 reads/second and 5 writes/second. That’s plenty for small personal projects that don’t have much data, aren’t particularly sensitive, and just need an always-on backend to sync with.

Even in an offline-first browser application with PouchDB, you might want to synchronise data before rendering most of the UI, if possible. That way, users aren’t greeted with a blank screen or stale data. The naive way to do a one-shot replication from the server down to the browser looks something like this:

```javascript
// remoteDB is the Cloudant database, localDB the in-browser PouchDB database
await PouchDB.replicate(remoteDB, localDB);
```

At first, this might fail because you haven’t set up CORS. So you go to the Cloudant settings and enable CORS for the relevant origins (or for all origins, which is not recommended). You try again, and everything is fine.

After a while, your database accumulates a couple of hundred documents. In fresh browser instances (a new device, a wiped database, maybe incognito mode), replication then starts to fail. In Firefox, it runs for a bit, then fails with error messages that seemingly blame the CORS settings.

The weird thing is, you’re sure CORS is set up correctly. In fact, the synchronisation kind of works, but only a couple of documents at a time.

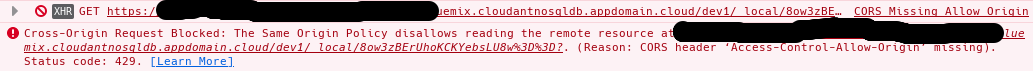

After much head-scratching, and more or less by accident, I finally had the Cloudant database load page open while trying to sync. It turns out I was exceeding the request limit: what Cloudant actually sent back were HTTP 429 Too Many Requests responses. The error message does kind of say so; it’s just drowned out by the CORS noise.

I’m still not really sure who’s to blame here.

Cloudant don’t set the Access-Control-Allow-Origin header on 429 responses. That’s inconvenient, but I guess it’s understandable, since rate-limiting responses should naturally be as cheap as possible. Also, their load graphs don’t show historical data, so you have to have them open to spot the problem as it occurs. Still, they’re providing me with a very convenient, free-as-in-beer service, so I really can’t complain.

Firefox tries to be helpful and links to the CORS documentation. Unfortunately, that’s a bit misleading: it says “Cross-Origin Request Blocked”, when in fact the request was made just fine and simply returned a 429 response. (That’s also why the 429 never reaches JavaScript: without an Access-Control-Allow-Origin header, the browser withholds the response from the page.) They provide me with a free-as-in-speech browser, so I really can’t complain.

What to do about it? I can think of three bad options and one good option:

Retry in a loop.

This is obviously not the right thing to do. But it makes some progress each time, so… I’m not proud to admit it, but I actually briefly tried this while figuring out what was going on.
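For concreteness, the retry loop amounts to roughly the following sketch. `retryInALoop` and its `replicateOnce` parameter are hypothetical names; `replicateOnce` stands in for something like `() => PouchDB.replicate(remoteDB, localDB)`. The loop presumably makes progress only because a repeated PouchDB replication resumes from its last checkpoint instead of starting over.

```javascript
// Bad option 1, sketched: retry immediately until replication succeeds.
// replicateOnce is a hypothetical parameter, e.g.
//   () => PouchDB.replicate(remoteDB, localDB)
async function retryInALoop(replicateOnce, maxAttempts = 20) {
  let lastError;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      // With PouchDB, a repeated replication picks up from its last checkpoint.
      return await replicateOnce();
    } catch (err) {
      // No pause: the next attempt runs into the rate limit again right away.
      lastError = err;
    }
  }
  throw lastError;
}
```

Nothing in it waits between attempts, which is exactly why it keeps tripping the limit.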

Make one big request.

This works because of a quirk in Cloudant’s accounting: a request is rejected if the previous requests have already exceeded the 20 reads/second window. If you raise PouchDB’s batch size so that all documents are fetched in one request, that request just slips through.
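One way to size that single batch is to ask the remote database how many documents it holds. The sketch below assumes CouchDB-style database info with `doc_count` and `doc_del_count` fields; `batch_size` and `batches_limit` are real PouchDB replication options, but the helper name `oneBigBatchOptions` and the sizing rule are illustrative assumptions.

```javascript
// Sketch of "one big request": size the batch so the whole database fits.
// oneBigBatchOptions is a hypothetical helper; the sizing rule is an assumption.
async function oneBigBatchOptions(remoteDB) {
  // CouchDB/Cloudant database info reports live and deleted document counts.
  const info = await remoteDB.info();
  // The changes feed also lists deleted documents, so count those too;
  // fall back to 0 if the field is missing.
  const total = info.doc_count + (info.doc_del_count || 0);
  // PouchDB expects a positive batch size, even for an empty database.
  return { batch_size: Math.max(total, 1), batches_limit: 1 };
}
```

Usage would be along the lines of `await PouchDB.replicate(remoteDB, localDB, await oneBigBatchOptions(remoteDB));`. The `info()` call is itself a request to Cloudant and presumably counts as a read, and this only works for as long as the quirk does.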

Make many small requests.

PouchDB has options for the number of concurrent replication batches (batches_limit) and the batch size (batch_size). Setting them to very, very small values avoids the rate limit at the cost of making way too many requests. You basically trade being explicitly rate-limited by Cloudant for being implicitly rate-limited by network latency.

```javascript
await PouchDB.replicate(remoteDB, localDB, { batch_size: 5, batches_limit: 1 });
```

Make PouchDB aware of rate limits.

This is, of course, the correct thing to do. I haven’t done it, at least not yet. PouchDB’s continuous (live) replication can already deal with errors and has a configurable retry policy (the retry and back_off_function options). It might not be too hard to adapt this to one-shot replication.
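A minimal sketch in that direction, done as a wrapper around PouchDB rather than a change to PouchDB itself: retry the one-shot replication with exponential backoff, so failed attempts wait longer and longer instead of hammering the rate limit. The function name, `replicateOnce`, the delay values and the attempt cap are all assumptions for illustration.

```javascript
// Sketch: one-shot replication that backs off after each failure.
// replicateOnce is a hypothetical parameter, e.g.
//   () => PouchDB.replicate(remoteDB, localDB)
// sleep is injectable so tests can skip the real delays.
const defaultSleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function replicateWithBackoff(replicateOnce, {
  maxAttempts = 8,
  initialDelayMs = 1000, // assumption: roughly one rate-limit window
  maxDelayMs = 30000,
  sleep = defaultSleep,
} = {}) {
  let delay = initialDelayMs;
  for (let attempt = 1; ; attempt++) {
    try {
      return await replicateOnce();
    } catch (err) {
      if (attempt >= maxAttempts) throw err;
      // The browser hides the 429 behind a CORS failure, so a rate-limit
      // rejection can't be told apart from other errors; retry them all.
      await sleep(delay);
      // Double the wait each time, capped at maxDelayMs.
      delay = Math.min(delay * 2, maxDelayMs);
    }
  }
}
```

Because the 429 is invisible to the page, the wrapper can only guess that a failure means “slow down”. Building on PouchDB’s own `retry`/`back_off_function` handling (which applies to live replications) would keep that logic inside the replicator instead.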

I’m currently using the third option, many small requests, and feeling bad about it. At the moment, I’m the only user of this application, and I don’t typically use it on new machines. This definitely needs fixing, though.